I am a PhD candidate at the School of Computing Technologies, RMIT University.

My current research focuses on regulatory technologies for blockchain systems. Specifically, I am exploring how to ensure legal compliance at the smart contract level.

Outside my academic work, I am interested in photography and linguistics. I am a huge fan of Steven Erikson's Malazan Book of the Fallen series.

Research Work

Currently, I am conducting research under the guidance of Dr. Hai Dong and Prof. Zahir Tari at RMIT, and Dr. H.M.N. Dilum Bandara at CSIRO's Data61.

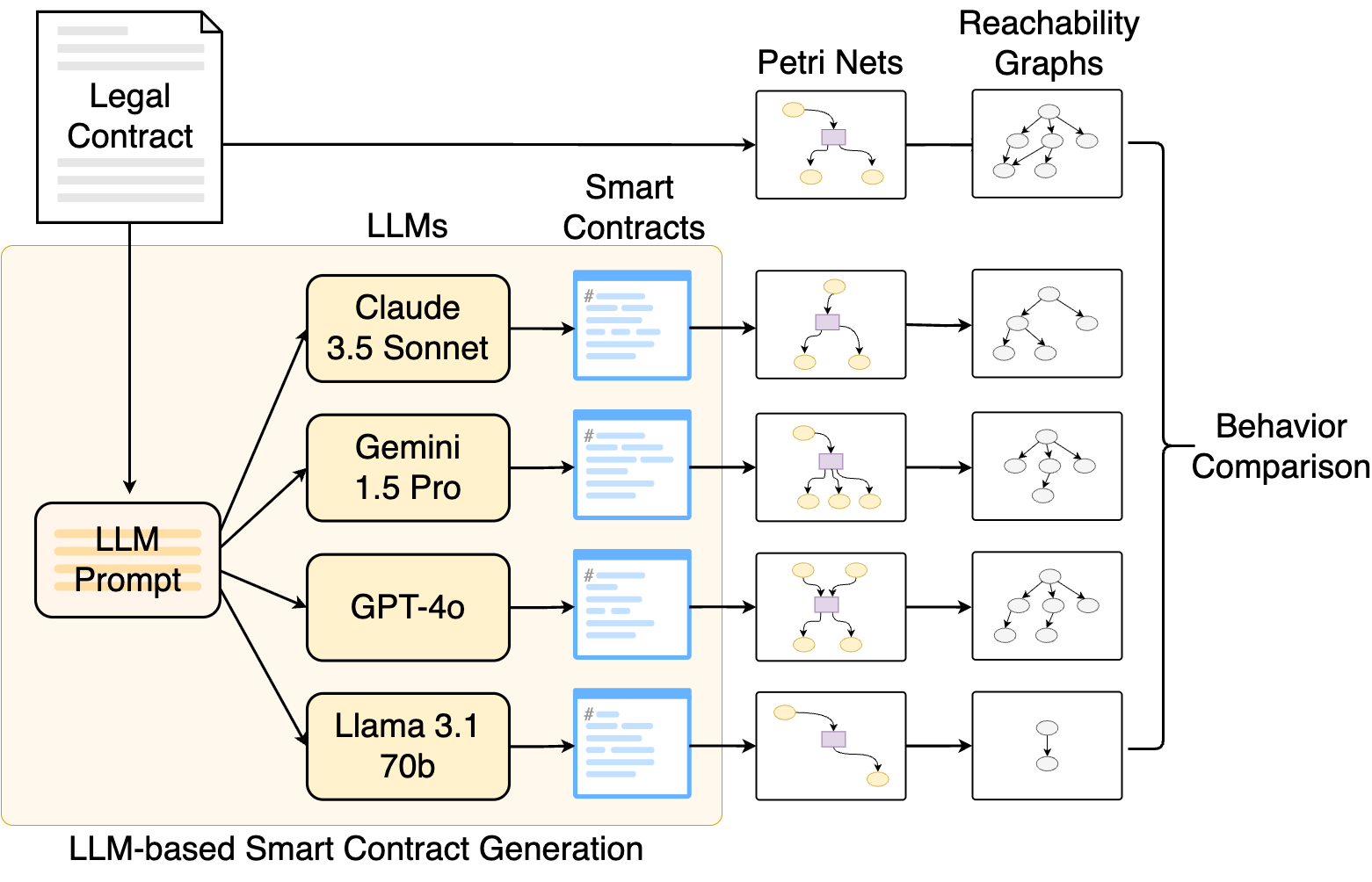

Legal Compliance Evaluation of Smart Contracts Generated By Large Language Models

Update (2025/06/06): We won the Best Paper award at ICBC 2025! 🥳

Smart contracts can implement and automate parts of legal contracts, but ensuring their legal compliance remains challenging. Existing approaches such as formal specification, verification, and model-based development require expertise in both the legal and software development domains, as well as extensive manual effort.

Given recent advances in code generation by Large Language Models (LLMs), we investigated whether they can address these challenges by generating legally compliant smart contracts directly from natural-language legal contracts. We propose a novel suite of metrics that quantifies legal compliance by modeling both legal contracts and smart contracts as processes and comparing their behaviors. Using four LLMs, we generated 20 smart contracts from five legal contracts and analyzed their legal compliance. We found that while all four LLMs generate syntactically correct code, their legal compliance varies significantly, with larger models generally achieving higher levels of compliance.

We also evaluated the proposed metrics against established properties of software metrics, showing that they provide fine-grained distinctions, enable nuanced comparisons, and apply across domains to code from any source, whether written by an LLM or a developer. Our results suggest that LLMs can help generate starter code for legally compliant smart contracts, provided that code is strictly reviewed, and that the proposed metrics provide a foundation for automated and self-improving development workflows.

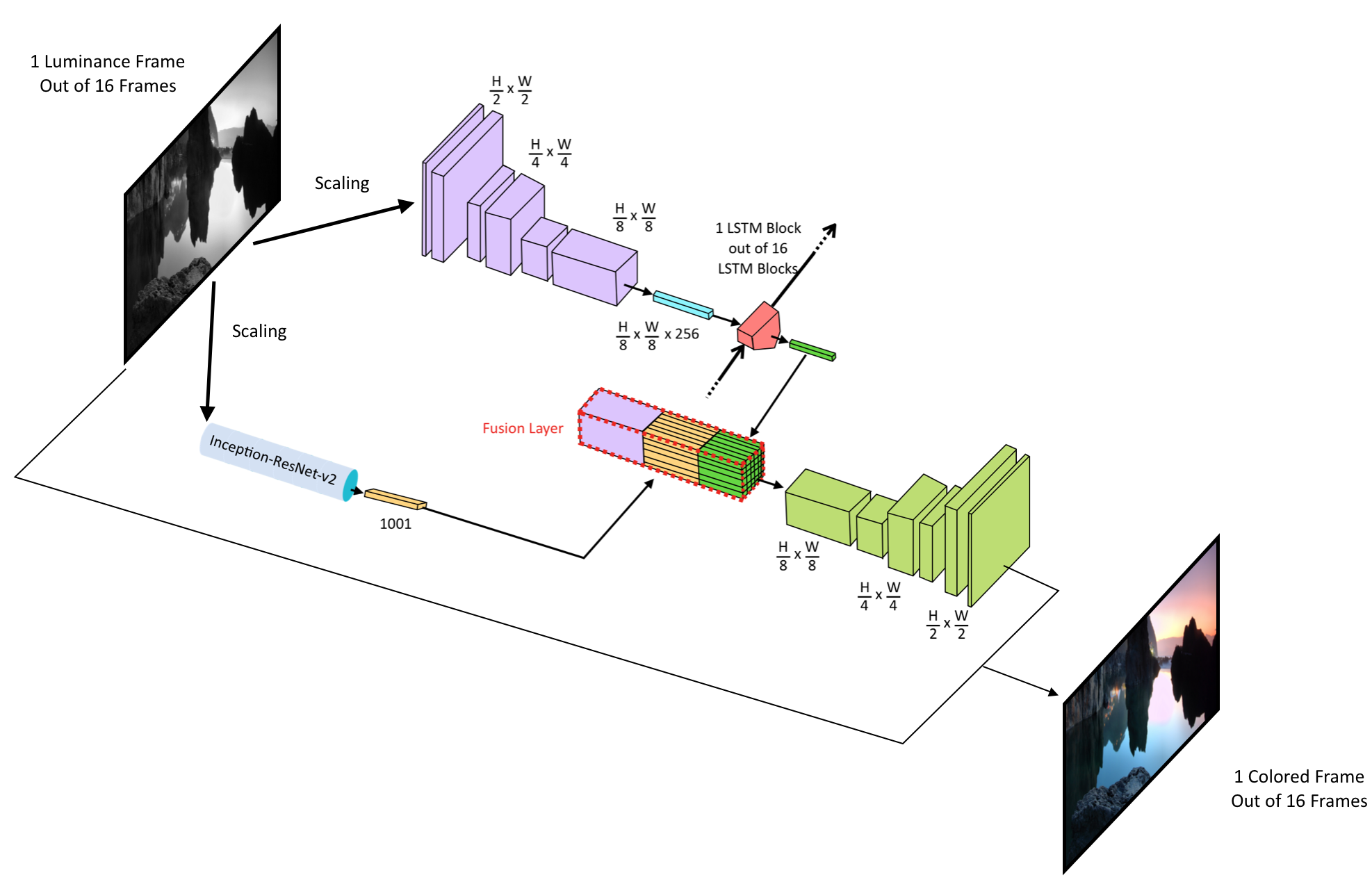

FlowChroma - A Deep Recurrent Network for Video Colorization

We developed an automated video colorization framework that minimizes color flickering across frames. Applying image colorization techniques to successive frames of a video treats each frame as a separate colorization task, so the colors of a scene are not necessarily kept consistent across subsequent frames.

The proposed solution includes a novel deep recurrent encoder-decoder architecture that maintains temporal and contextual coherence between consecutive frames of a video. A high-level semantic feature extractor automatically identifies the context of a scene, and a custom fusion layer combines the spatial and temporal features of a frame sequence.

Video Colorization Dataset & Benchmark

Compared with image colorization, video colorization is a relatively unexplored area in computer vision. Most available video colorization models are extensions of image colorization models and therefore cannot address some issues unique to the video domain.

In this project, we evaluated the applicability of image colorization techniques to video colorization, identifying problems inherent to videos and the attributes that affect them. We developed a dataset and benchmark to measure the effect of these attributes on video colorization quality, and demonstrated that our benchmark aligns with human evaluations.

Last updated: June 2025